Agents Are Not Enough, and Learning

- Jan 10, 2025

This week, I would like to share an article entitled “Agents Are Not Enough” by Shah and White (Dec 2024). You will notice that this is not a typical SoTL paper; however, I found that it aligns well with how humans learn. I thought it might be interesting to ask GPT to “Create a summary connecting foundational learning theories to the paper.”

The emergence of Agents, Sims, and Assistants in AI aligns with foundational learning theories, illuminating the ways these systems can support personalized, adaptive, and socially acceptable user interactions:

Behaviorism and Reactive Agents

Theory: Behaviorism emphasizes learning through responses to stimuli. Reactive agents embody this by acting based on environmental inputs, mirroring stimulus-response learning. However, their lack of adaptability and planning, akin to memorization in humans, limits their utility in dynamic environments.

Application: Incorporating reinforcement learning into agents enhances their ability to adapt, akin to shaping behaviors through reinforcement in behavioral psychology.
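The contrast between a purely reactive agent and one shaped by reinforcement can be made concrete with a toy sketch. This is an illustration only, not code from the paper: the class names, the `reward_for` environment, and the learning rate are all assumptions chosen to keep the example small.

```python
from collections import defaultdict


class ReactiveAgent:
    """Maps each stimulus to a fixed response and never changes."""

    def __init__(self, rules):
        self.rules = dict(rules)

    def act(self, stimulus):
        return self.rules.get(stimulus, "do_nothing")


class ReinforcedAgent:
    """Keeps a value estimate per (stimulus, action) and picks the highest."""

    def __init__(self, actions, learning_rate=0.5):
        self.actions = list(actions)
        self.learning_rate = learning_rate
        self.values = defaultdict(float)

    def act(self, stimulus):
        # Greedy choice; ties go to the earliest action in the list.
        return max(self.actions, key=lambda a: self.values[(stimulus, a)])

    def learn(self, stimulus, action, reward):
        # Move the estimate a fraction of the way toward the observed reward.
        key = (stimulus, action)
        self.values[key] += self.learning_rate * (reward - self.values[key])


def reward_for(stimulus, action):
    # Hypothetical environment: only a formal reply to a greeting is rewarded.
    return 1.0 if (stimulus, action) == ("greeting", "reply_formally") else -1.0


reactive = ReactiveAgent({"greeting": "reply_casually"})
adaptive = ReinforcedAgent(["reply_casually", "reply_formally"])

for _ in range(5):
    choice = adaptive.act("greeting")
    adaptive.learn("greeting", choice, reward_for("greeting", choice))

print(reactive.act("greeting"))  # still the hard-coded response
print(adaptive.act("greeting"))  # the response that feedback has reinforced
```

The reactive agent keeps giving its memorized answer no matter how it is received, while the reinforced agent drops the penalized response after one try, much as shaping works in behavioral psychology.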

Cognitivism and Cognitive Architectures

Theory: Cognitivism focuses on internal mental processes like memory and problem-solving. Cognitive architectures mirror these processes by integrating perception, memory, and reasoning but struggle with scalability and real-time application.

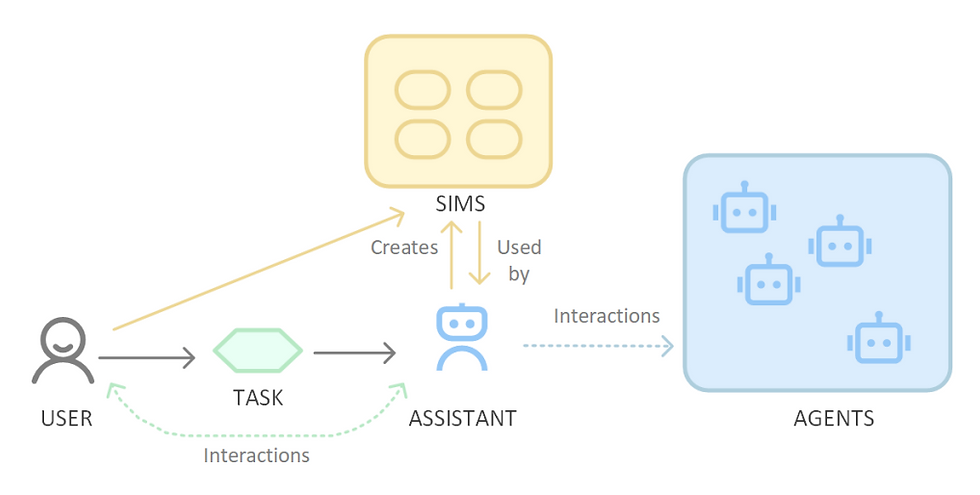

Application: The AI framework's layered approach, with Sims acting as a dynamic user model and Assistants orchestrating agent activities, exemplifies distributed cognition. This structure mirrors the human brain's compartmentalization of tasks, enhancing adaptability and scalability.
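One way to picture the layering is the minimal sketch below, in which an Assistant consults a Sim (the user model) and then hands the task to a narrow Agent. Everything here is hypothetical: the class and function names, the preference keys, and the `private_keys` filter are assumptions made for illustration, not the design described by Shah and White.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Set


@dataclass
class Sim:
    """A user model holding preferences, some of which stay private."""
    preferences: Dict[str, str] = field(default_factory=dict)
    private_keys: Set[str] = field(default_factory=set)

    def view_for_agent(self) -> Dict[str, str]:
        # Share only the preferences that are not marked private.
        return {k: v for k, v in self.preferences.items()
                if k not in self.private_keys}


# An Agent is modeled as a plain function: (task, shared preferences) -> result.
Agent = Callable[[str, Dict[str, str]], str]


def travel_agent(task: str, prefs: Dict[str, str]) -> str:
    return f"Booked {prefs.get('seat', 'any')} seat for: {task}"


def calendar_agent(task: str, prefs: Dict[str, str]) -> str:
    return f"Scheduled '{task}' in the {prefs.get('meeting_time', 'afternoon')}"


@dataclass
class Assistant:
    """Sits between the user and the Agents and routes each task."""
    sim: Sim
    agents: Dict[str, Agent]

    def handle(self, kind: str, task: str) -> str:
        agent = self.agents.get(kind)
        if agent is None:
            return f"No agent available for '{kind}'"
        return agent(task, self.sim.view_for_agent())


sim = Sim(
    preferences={"seat": "aisle", "meeting_time": "morning",
                 "home_address": "12 Example Road"},
    private_keys={"home_address"},
)
assistant = Assistant(sim=sim,
                      agents={"travel": travel_agent, "calendar": calendar_agent})

print(assistant.handle("travel", "flight to Boston"))
print(assistant.handle("calendar", "project review"))
```

Each Agent stays narrow and knows nothing about the user beyond what the Assistant passes along, which is the compartmentalization the paragraph above describes.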

Constructivism and Personalization

Theory: Constructivism posits that learners construct knowledge through experiences and contextual interactions. Sims, representing user profiles and behaviors, align with this by enabling agents to tailor actions based on users' unique contexts and preferences.

Application: Allowing Assistants to co-create Sims based on user interactions fosters an experiential learning model, ensuring agents adapt to individual needs while preserving privacy.

Social Constructivism and Multi-Agent Systems

Theory: Learning is influenced by social interaction and collaboration. Multi-agent systems reflect this by requiring agents to coordinate effectively, akin to collaborative group problem-solving.

Application: Advanced coordination protocols and negotiation mechanisms for agents parallel effective group dynamics, ensuring seamless collaboration to achieve complex tasks.

Humanistic Theory and User Agency

Theory: Humanistic psychology emphasizes autonomy, self-actualization, and respect for individual agency. The trade-offs in user control versus agent autonomy directly touch on this theory.

Application: Assistants, acting as intermediaries between users and agents, support self-directed use of technology, allowing users to delegate tasks without relinquishing significant control.

Situated Learning and Ecosystem Interactions

Theory: Learning is embedded within the activity, context, and culture in which it occurs. The ecosystem's design of integrating Agents, Sims, and Assistants facilitates situated interactions, mirroring real-world contexts where learning and problem-solving occur.

Application: By representing user preferences and environments through Sims, and enabling Assistants to manage nuanced task execution, the ecosystem embodies contextual learning principles.

The challenges AI systems face (generalizing across contexts, handling complexity, and ensuring adaptability) mirror human cognitive limitations. Just as students struggle with solving tasks and transferring knowledge to novel scenarios, agents falter in scalability, coordination, and robustness when confronted with real-world variability. This similarity highlights the need for improved learning architectures, both in AI systems and human learners.

The paper's exploration of how to fix agents resonates with educational efforts to enhance student learning. AI recommendations such as creating new hybrid architectures echo the Dual-Memory Model (Atkinson & Shiffrin, 1971). For instance, storing and executing workflows while reducing dependency on foundation models aligns with separating and managing working and long-term memory in students. Decomposing tasks into sub-tasks, akin to scaffolding in education, enables both AI and learners to process complex information incrementally and effectively.
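The idea of storing workflows so that a model is consulted less often can be sketched as a small cache of task decompositions. This is a toy under stated assumptions: `toy_planner` merely stands in for a call to a foundation model, and `WorkflowStore` and `run` are hypothetical names, not anything defined in the paper.

```python
from typing import Callable, Dict, List


class WorkflowStore:
    """Remembers how a task was decomposed so the planner is asked only once."""

    def __init__(self, planner: Callable[[str], List[str]]):
        self.planner = planner
        self.saved: Dict[str, List[str]] = {}  # the "long-term memory"
        self.planner_calls = 0

    def steps_for(self, task: str) -> List[str]:
        if task not in self.saved:
            # Cache miss: fall back to the (expensive) planner and keep its answer.
            self.planner_calls += 1
            self.saved[task] = self.planner(task)
        return self.saved[task]


def toy_planner(task: str) -> List[str]:
    # Stand-in for a foundation model that breaks a task into sub-tasks.
    return [f"gather inputs for {task}",
            f"carry out {task}",
            f"check the result of {task}"]


def run(task: str, store: WorkflowStore) -> List[str]:
    # Execute the sub-tasks one at a time, in order.
    return [f"done: {step}" for step in store.steps_for(task)]


store = WorkflowStore(toy_planner)
first = run("file expense report", store)
second = run("file expense report", store)

print(len(first), store.planner_calls)
```

The second run reuses the stored steps instead of asking the planner again, loosely like a student recalling a practiced procedure from long-term memory rather than working it out afresh.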

The call for incorporating robust learning algorithms, such as reinforcement learning and transfer learning, parallels instructional strategies aimed at building adaptive expertise in students. Just as reinforcement learning enables agents to adjust based on outcomes, educators use feedback loops to reinforce correct responses and correct misconceptions. Similarly, transfer learning in AI aligns with teaching methods that encourage students to apply previous knowledge to novel contexts, fostering deeper understanding and adaptability.

References

Shah, C., & White, R. (2024). Agents Are Not Enough. In Proceedings of ACM Conference (Under review). ACM, New York, NY, USA, 4 pages. https://doi.org/10.1145/nnnnnnn.nnnnnnn
